Causation

The question, “What is causation?” may sound trivial: it is as sure as common knowledge can ever be that some things cause others, that there are causes and they necessitate certain effects. We say that we know that what caused the president’s death was an assassin’s shot. But when asked why, we will most certainly reply that it is because the latter was necessary for the former, which is an answer that, upon close philosophical examination, falls short of veracity. In a less direct way, the president’s grandmother’s giving birth to his mother was necessary for his death too. That, however, we would not describe as this death’s cause.

The first section of this article states the reasons why we should care about causation, including those that are non-philosophical. Sections 2 and 3 define the axis of the division into ontological and semantic analyses, with the Kantian and skeptical accounts as two alternatives. Set out there is also Hume’s pessimistic framework for thinking about causation—since before we ask what causation is, it is vital to consider whether we can come to know it at all.

Section 4 examines the semantic approaches, which analyze what it means to say that one thing causes another. The first, the regularity theories, turn out to be problematic when dealing with unrepeatable and implausibly enormous cases, among many others. Some of these problems also limit the ambitions of Lewis’s theory of causation as a chain of counterfactual dependence, which suffers in addition from the causal redundancy and causal transitivity objections. The scientifically-minded interventionists try to reconnect our will to talk in terms of causation with our agency, while probability theories accommodate the indeterminacy of quantum physics and relax the strictness of exceptions-unfriendly regularity accounts. Yet the latter risk falling into the trap of confounding causation and probability.

The next section brings us back to ontology. Since causation is hardly a particular entity, nominalists define it in terms of recurrence, as nothing over and above its instances. Realists bring forward the relation of necessitation, seemingly in play whenever causation occurs. Dispositionalism claims that to cause means to dispose to happen. Process theories base their analysis on the notions of process and transmission (for instance, of energy), which might capture well the nature of causation in the most physical sense.

Another historically significant family of approaches is the concern of Section 6, which examines how Kant removes causation from the domain of things-in-themselves to include it in the structure of consciousness. This has also inspired the agency views which claim agency is inextricably tied up with causal reasoning.

The seventh and last section deals with the most skeptical work on causation. Some, following Bertrand Russell, have tried to get rid of the concept altogether, believing it a relic of a past and timeworn metaphysical speculation. Pluralism and thickism see the ill fate of any attempt at defining causation in that what the word can mean is in fact a bundle of different concepts, or not any single and meaningful one at all.

Table of Contents

What Is Causation and Why Do People Care?

Hume’s Challenge

A Family Tree of Causal Theories

Semantic Analyses

Regularity Theories

The Problem of Implausibly Enormous Cases

The Problem of the Common Cause

The Problem of Overdetermination

The Problem of Unrepeatability

Counterfactual Theories

The Problems of Common Cause, Enormousness and Unrepeatability

The Problem of Causal Redundancy

A New Problem: Causal Transitivity

Interventionism

Probabilistic Theories

Ontological Stances

Nominalism

Realism

Dispositionalism

Process Theories

Kantian Approaches

Kant Himself

Agency Views

Skepticism

Russellian Republicanism

Pluralism and Thickism

References and Further Reading

1. What Is Causation and Why Do People Care?

Causation is a live topic across a number of disciplines, due to factors other than its philosophical interest. The second half of the twentieth century saw an increase in the availability of information about the social world, the growth of statistics and the disciplines it enables (such as economics and epidemiology), and the growth of computing power. This led, at first, to the prospect of much-improved policy and individual choice through analysis of all this data, and especially in the early twenty-first century, to the advent of potentially useful artificial intelligence that might be able to achieve another step-change in the same direction. But in the background of all of this lurks the specter of causation. Using information to inform goal-directed action often seems to require more than mere extrapolation or projection. It often seems to require that we understand something of the causal nature of the situation. This has seemed painfully obvious to some, but not to others. Increasing quantities of information and abilities to process it force us to decide whether or not causation is part of this march of progress or an obstacle on the road.

So much for why people care about causation. What is this thing that we care about so much?

To paraphrase the great physicist Richard Feynman, it is safe to say that nobody understands causation. But unlike quantum physics, causation is not a useful calculating device yielding astoundingly accurate predictions, and those who wish to use causal reasoning for any actual purpose do not have the luxury of following Feynman’s injunction to “shut up and calculate”. The philosophers cannot be pushed into a room and left to debate causation; the door cannot be closed on conceptual debate.

The remainder of this section offers a summary of the main elements of disagreement. The next section presents a “family tree” of different historical and still common views on the topic, which may help to make some sense of the state of the debate.

Some philosophers have asked what causation is, that is, they have asked an ontological question. Some of these have answered that it is something over and above (or at least of a different kind from) its instances: that there is a “necessitation relation” that is a universal rather than a particular thing, and in which cause-effect pairs participate, or of which they partake, or something similar “in virtue” of which they instantiate causation (Armstrong, 1983). These are realists about causation (noting that others discussed in this paragraph are also realists in a more general sense, but not about universals). Others, perhaps a majority, believe that causation is something that supervenes upon (or is ultimately nothing over and above) its instances (Lewis, 1983; Mackie, 1974). These are nominalists. Yet others believe that it is something somewhat different from either option: a disposition, or a bundle of dispositions, which are taken to be fundamental (Mumford & Anjum, 2011). These are dispositionalists.

Second, philosophers have sought a semantic analysis of causation, trying to work out what “cause” and cognates mean, in some deeper sense of “meaning” than a dictionary entry can satisfy. (It is worth bearing in mind, however, that the ontological and semantic projects are often pursued together, and cannot always be separated.) Some nominalists believe it is a form of regularity holding between distinct existences (Mackie, 1974). These are regularity theorists. Others, counterfactual theorists, believe it is a special kind of counterfactual dependence between distinct existences (Lewis, 1973a), and others hold that causes raise the probability of their effects in a special way (Eells, 1991; Suppes, 1970). Among counterfactual theorists are various subsets, notably interventionists (for example, Woodward, 2003) and contrastivists (for example, Schaffer, 2007). There is also an overlapping subset of thinkers with a non-philosophical motivation, and sometimes background, who develop technical frameworks for the purpose of performing causal inference and, in doing so, define causation, thus straying into the territory of offering semantic analysis (Hernán & Robins, 2020; Pearl, 2009; Rubin, 1974). Out of kilter with the historical motivation of those approaching counterfactual theorizing from a philosophical angle, some of those coming from practical angles appear not to be nominalists (Pearl & Mackenzie, 2018). Yet others, who may or may not be nominalists, hold that causation is a pre-scientific or “folk science” notion which, like “energy”, should be mapped onto a property identified by our current best science, even if that means deviating from the pre-scientific notion (Dowe, 2000).

Third, there are those who take a Kantian approach. While this is an answer to ontological questions about causation, it is reasonably treated in a separate category, different from the ontological approach mentioned first above in this section, because the question Kant tried to answer is better summarized not as “What sort of thing is causation?” but “Is causation a thing at all?” Kant himself thought that causation is a constitutive condition of experience (Kant, 1781), thus not a part of the world, but a part of us—a way we experience the world, without which experience would be altogether impossible. Late twentieth-century thinkers suggested that causation is not a necessary precondition of all experience but, more modestly, a dispositional property of us to react in certain ways—a secondary property, like color—arising from the fact that we are agents (Menzies & Price, 1993).

The fourth approach to causation is, in a broad sense, skeptical. Thus some have taken the view that it is a redundant notion, one that ought to be dispensed with in favor of modern scientific theory (Russell, 1918). Such thinkers do not have a standard name but might reasonably be called republicans, following a famous line of Bertrand Russell’s (see the first subsection of Section 7). Some (pluralists) believe that there is no single concept of causation but a plurality of related concepts which we lump together under the word “causation” for some reason other than that there is such a thing as causation (Cartwright, 2007; Stapleton, 2008). Yet another view, which might be called thickism and which may or may not be a form of pluralism, holds that causal concepts are “thick”, as some have suggested for ethical concepts (Anscombe, 1958; although Anscombe did not use this term herself). That is, the fundamental referents of causal judgements are not causes, but kicks, pushes, and so forth, out of which there is no causal component to be abstracted, extracted, or meaningfully studied (Anscombe, 1969; Cartwright, 1983).

Cutting across all these positions is a question as to what the causal relata are, if indeed causation is a relation at all. Some say they are events (Lewis, 1973a, 1986); others, aspects (Paul, 2004); or others, facts (Mellor, 1995), among other ideas.

Disagreement about fundamentals is great news if you are a philosopher, because it gives you plenty to work on. It is a field of engagement that has not settled into trench warfare between a few big guns and their troops. It is indicative of a really fruitful research area, one with live problems, fast-paced developments, and connections with real life—that specter that lurks in the background of philosophy seminar rooms and lecture halls, just as causation lurks in the background of more practical engagements.

However, confusion about fundamentals is not great news if you are trying to make the best sense of the data you have collected, looking for guidance on how to convince a judge that your client is or is not liable, trying to make a decision about whether to ban a certain food additive or wondering how your investment will respond to the realization of a certain geopolitical risk. It is certainly not helpful if one is trying to decide what will be the most effective public health measure to slow the spread of an epidemic.

2. Hume’s Challenge

David Hume posed the questions that all the ideas discussed in the remainder of this article attempt to answer. He had various motivations, but a fair abridgement might be as follows.

Start with the obvious fact that we frequently have beliefs about what will happen, or is happening elsewhere right now, or has happened in the past, or, more grandly, what happens in general. One of Hume’s examples is that the sun will rise tomorrow. An example he gives of a general belief is that bread, in general, is nourishing. How do we arrive at these beliefs?

Hume argues that such beliefs derive from experience. We believe the sun rises because we have experienced it rising on all previous mornings. We believe bread is nourishing because it has always been nourishing when we have encountered it in our experience.

However, Hume argues that this is an inadequate justification on its own for the kind of inference in question. There is no contradiction in supposing that the sun will simply not rise tomorrow. This would not be logically incompatible with previous experience. Previous experience does not render it impossible. On the contrary, we can easily imagine such a situation, perhaps use it as the premise for a story, and so forth. Similar remarks apply to the nourishing effects of bread, and indeed to all our beliefs that cannot be justified logically (or mathematically, if that is different) from some indisputable principles.

In arguing thus, Hume might be understood as reacting to the rationalist component of the emerging scientific worldview, that component that emphasized the ability of the human mind to reach out and understand. Descartes believed that through the exercise of reason we could obtain knowledge of the world of experience. Newton believed that the world of experience was indeed governed by some kind of mathematical necessity or numerical pattern, which our reason could uncover, and thus felt able to draw universal conclusions from a little, local data. Hume rejected the confidence characteristic of both Descartes and Newton. Given the central role that this confidence about the power of the human mind played in the founding of modern science, Hume, and empiricists more generally, might be seen as offering not a question about common sense inferences, but a foundational critique of one of the central impulses of the entire scientific enterprise—perhaps not how twentieth and twenty-first-century philosophers in the Anglo-American tradition would like to see their ancestry and inspiration.

Hume’s argument was simple and compelling and instantiated what appears to be a reasonably novel argumentative pattern or move. He took a metaphysical question and turned it into an epistemological one. Thus he started with “What is necessary connection?” and moved on to “How do we know about necessary connection?”

The answer to the latter question, he claimed, is that we do not know about it at all, because the only kind of necessity we can make sense of is that of logical and mathematical necessity. We know about the necessity of logic and mathematics through examining the relevant “ideas”, or concepts, and seeing that certain combinations necessitate others. The contrary would be contradictory, and we can test for this by trying to imagine it. Gandalf is a wizard, and all wizards have staffs; we cannot conceive of these claims being true and yet Gandalf being staff-less. Once we have the ideas indicated in those claims, Gandalf’s staff ownership status is settled.

Experience, however, offers no necessity. Things happen, while we do not perceive their being “made” to happen. Hume’s argument to establish this is the flip side of his argument in favor of our knowledge of a priori truths. He challenges us to imagine causes happening without their usual effects: bread not to nourish, billiard balls to go into orbit when we strike them (this example is a somewhat augmented form of Hume’s own one), and so forth. It seems that we can do this easily. So we cannot claim to be able to access necessity in the empirical world in this way. We perceive and experience constant conjunction of cause and effect and we may find it fanciful to imagine stepping from a window and gently floating to the ground, but we can do it, and sometimes do so, both deliberately and involuntarily (who has not dreamed they can fly?). But Hume agrees with Descartes that we cannot even dream that two and two make five (if we clearly comprehend those notions in our dream—of course one can have a fuzzy dream in which one accepts the claim that two and two make five, without having the ideas of two, plus, equals and five in clear focus).

Hume’s skepticism about our knowledge of causation leads him to skepticism about the nature of causation: the metaphysical question is given an epistemological treatment, and then the answer returned to the metaphysical question is epistemologically motivated. His conclusion is that, for all we can tell, there is no necessary connection, there is only a series of constant conjunctions, usually called regularities. This does not mean that there is no causal necessity, only that there is no reason to believe that there is. For the Enlightenment project of basing knowledge on reason rather than faith, this is devastating.

The constraint of metaphysical speculation by epistemological considerations remains a central theme of twenty-first century philosophy, even if it has somewhat loosened its hold in this time. But Hume took his critique a step further, with further profound significance for this whole philosophical tradition. He asked what we even mean by “cause”, and specifically, by that component of cause he calls “necessary connection”. (He identifies two others: temporal order and spatiotemporal contiguity. These are also topics of philosophical and indeed physical debate, but are less prominent in early twenty-first century philosophy, and thus are not discussed in this article.) He argues that we cannot even articulate what it would be for an event in the world we experience to make another happen.

The argument reuses familiar material. We have a decent grasp on logical necessity; it is the incoherence of the denial of the necessity in question, which we can easily spot (in his view). But that is not the necessary connection we seek. The question then remains open: what other kind of necessity could there be? If it does not involve the impossibility of what is necessitated, then in what sense is it necessitated? This is not a rhetorical question; it is a genuine request for explanation. Supplying one is, at best, difficult; at worst, it is impossible. Some have tried (several attempts are discussed throughout the remainder of the article) but most have taken the view that it is impossible. Hume’s own explanation is that necessary connection is nothing more than a feeling, the expectation created in us by endless experience of same cause followed by same effect. Granted, this is a meaning for “necessary connection”; but it is one that robs “necessary” of anything resembling necessity.

The move from “What is X?” to “What does our concept of X mean?” has driven philosophers even harder than the idea that metaphysical speculation must be epistemologically constrained—partly because philosophical knowledge was thought for a long time to be constrained to knowledge of meanings; but that is another story (see Ch 10 of Broadbent, 2016).

This is the background to all subsequent work on causation as rejuvenated by the Anglo-American tradition, and also to the practical questions that arise. The ideas that we cannot directly perceive causation, and that we cannot reason logically from cause to effect, have repeatedly given rise to obstacles in science, law, policy, history, sports, business, politics—more or less any “world-oriented” activity you can think of. The next section summarizes the ways that people have understood this challenge: the most important questions they think it raises and their answers to these questions.

3. A Family Tree of Causal Theories

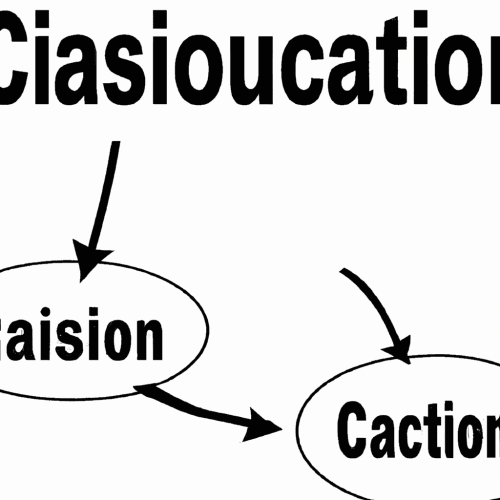

Here is a diagram indicating one possible way of understanding the relationship between different historically significant and still influential theories of, and approaches to, and even understandings of the philosophical problems posed by causation—and indeed some approaches that do not think the problems are philosophical at all.

Figure 1. A “family tree” of theories of causation

At the top level are approaches to causation corresponding to the kinds of questions one might deem important to ask about it. At the second level are theories that have been offered in response to these questions. Some of these theories have sub-theories which do not really merit their own separate level, and are dealt with in this article as variations on a theme (each receiving treatment in its own subsection).

Some of these theories motivate each other; in particular, nominalism and regularity theories often go hand-in-hand. Others are relatively independent, while some are outright incompatible. These compatibility relationships themselves may be disputed.

Two points should be noted regarding this family tree. First, an important topic is absent: the nature of the causal relata. This is because a stance about their nature does not constitute a position about causation on its own; it cuts across this family tree and features importantly in some theories but not in others. While some philosophers have argued that it is very important (Mellor, 1995, 2004; Paul, 2004; Schaffer, 2007), and featured it centrally in their theories of causation (second level on the tree), it does not feature centrally in any approach to causation (top level on the tree), except insofar as everyone agrees that the causal relata, whatever they are, must be distinct to avoid mixing up causal and constitutive facts. The topic is skipped in this article because, while it is interesting, it is somewhat orthogonal.

The second point to note about this family tree is that others are possible. There are many ways one might understand twenty-first-century work on causation, and thus there are other “family trees” implicit in other works, including other introductions to the topic. One might even think that no such family tree is useful. The one presented above is only a tool, one that the reader might find useful, but it should ultimately be treated as itself a topic for debate, dispute, amendment, or rejection.

4. Semantic Analyses

Semantic analyses of causation seek to give the meaning of causal assertions. They typically take “c causes e” to be the exemplary case, where “c” and “e” may be one of a number of things: facts, events, aspects, and so forth. (Here, lower case letters c and e are used to denote some particular cause and effect respectively. Upper case letters C and E refer to classes and yield general causal claims, as in “smoking causes lung cancer”.) Whatever they are, they are universally agreed to be distinct, since otherwise we would wrongly confuse constitutive with causal relations. My T-shirt’s having yellow bananas might end up as a cause of its having yellow shapes on it, for example, which is widely considered to be unacceptable—because it is quite different from my T-shirt’s yellow bananas causing the waitress bringing me my coffee to stare.

The main three positions are regularity theories, probabilistic theories, and counterfactual theories.

a. Regularity Theories

The regularity theory implies that causes and effects are not usually one-off pairs, but recurrent. Not only is the coffee I just drank causing me to perk up, but drinking coffee often has this effect. The regularity view claims that two facts suffice to explain causation: the fact that causes are followed by their effects, plus the fact that cause-effect pairs happen a lot. Coincidental pairings, on the other hand, do not typically recur. I scratched my nose while drinking the coffee, and this scratching was followed by me perking up. But nose-scratching is not generally followed by perking up, whereas coffee-drinking is. Coffee-drinking and perking up are part of a regularity; in Hume’s phrase they are constantly conjoined. The same cannot be said of nose-scratching and perking up.

Obviously, the tool needs sharpening. Most of the Cs that we encounter are not always followed by Es, and most of the Es that we encounter are not always (that is, not only) caused by Cs. The assassin shoots (c) the president, who dies (e). But assassins often miss. Moreover, presidents often die of things other than being shot.

David Hume is sometimes presented as offering a regularity theory of causation (usually on the basis of Pt 5 of Hume, 1748), but this is crude at best and downright false at worst (Garrett, 2015). More plausibly, he offered regularities as the most we can hope for in ontology of causation, that is, as the basis of any account of what there might be “in the objects” that most closely corresponds to the various causal notions we have. But his approach to semantics was revisionary; he took “cause” to express a feeling that the experience of regularity produces in us. Knowing whether such regularities continue in the objects beyond our experience requires that we know of some sort of necessary connection sustaining the regularity. And the closest thing to necessary connection that we know about is regularity. We are in a justificatory circle.

It was John Stuart Mill who took Hume’s regularity ontology and turned it into a regularity theory of causation (Mill, 1882). The first thing he did was to address the obvious point that causes and effects are not constantly conjoined, in either direction. He confined the direction of constancy to the cause-effect direction, so that causes are always followed by their effects, but effects need not always be preceded by the same causes. He expanded the definition of “cause” to include the enormousness that suffices for the effect. So, if e is the president’s death, then to say that c caused e is not to say that Es are always preceded by Cs, but rather that Cs are always followed by Es. Moreover, when we speak of the president’s being shot as the cause, we are being casual and strictly inaccurate. Strictly speaking, c is not the cause, but c*, being the entirety of things that were in place, including the shot, such that this entirety of things is sufficient for the president’s death. Strictly speaking, c* is the cause of e. There is no mysterious necessitation invoked because “sufficient” here just means “is always followed by”. When the wind is as it was, and the president is where he was, and the assassin aims so, and the gun fires thus, and so on and so forth, the president always dies, in all situations of this kind.

Mill thought that exceptionless regularities could be achieved in this way. In fact, he believed that the “law of causality”, being the exceptionless regularity between causes (properly defined) and effects, was the only true law (Mill, 1882). All the laws of science, he believed, had exceptions: objects falling in air do not fall as Newton’s laws of motion say they should, for example (this example is not Mill’s own). But objects released just so, at just such a temperature and pressure, with just such a mass, shape and surface texture, always fall in this way. Thus, according to Mill, the law of causality was the fundamental scientific law.

This theory faces a number of objections, even setting aside the lofty claims about the status of the “law of causality”. The subsubsections below discuss four of them.

i. The Problem of Implausibly Enormous Cases

To be truly sufficient for an effect, a cause must be enormous. It must include everything that, if on another occasion it is different, yields an overall condition that is followed by a different effect. It is questionable that “cause” is reasonably understood as referring to such an enormousness.

Enormousness poses problems for more than just the analysis of the common meaning of “cause”. It also makes it unclear how we can arrive at and use knowledge of causes. These are such gigantic things that they are bound to be practically unknowable to us. What licenses our merry inference from a shot by an ace assassin who has never yet missed to the imminent death of the president is not the fact that the assassin has never yet missed, since this constancy is incidental; the causal regularity is between huge preceding conditions. In the previous cases where the assassin shot, these conditions may well not have been at all the same.

It is not clear that such objections are compelling, however. The idea of Mill’s account concerns the nature of causation and not our knowledge of it, much less our casual inferences, which might well depend on highly contingent and local regularities, which might be underwritten by truly causal ones without instantiating them. Mill himself provides a lengthy discussion of the use of causal language to pick out one part of the whole cause. As for getting the details right, Mill’s central idea seems to admit of other implementations, and an advocate would want to try these.

There was a literature in the early-to-middle twentieth century trying, in effect, to mend Mill’s account so as to get the blend of necessity and sufficiency just right for correctly characterizing the semantics of “cause”, against a background assumption that Millian regularity was the ultimate ontological truth about causation. This literature took its final form in J. L. Mackie’s INUS analysis (Mackie, 1974).

Mackie offered more than one account of causation. His INUS analysis was an account of causation “in the objects”, that is, an account in the Humean spirit of offering the closest possible objective characterization of what we appear to mean by causal judgements, without necessarily supposing that causal judgements are ultimately meaningful or that they ultimately refer to anything objective.

Mackie’s view was that a cause was an insufficient but necessary part of an unnecessary but sufficient condition for the effect. Bear in mind that “necessary” and “sufficient” are to be understood materially, non-modally, as expressing regularities: “x is necessary for y” means “y is always accompanied (or in the causal case, preceded) by x” and “x is sufficient for y” means “x is always accompanied (or in the causal case, followed) by y”. If we set aside temporal order, necessity and sufficiency are thus inter-definable; for x to be sufficient for y is for y to be necessary for x, and vice versa.
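The material, non-modal reading of “necessary” and “sufficient” can be illustrated with a small sketch. It is an illustration only: representing each observed situation as a set of factor labels, and the names `situations`, `is_sufficient` and `is_necessary`, are assumptions introduced here rather than anything found in Mackie. Temporal order is set aside, as in the passage above.

```python
# Hypothetical record of observed situations; each is the set of factors present.
# The labels are illustrative assumptions, not taken from the source.
situations = [
    {"shot", "president_dies"},
    {"shot", "president_ducks"},
    {"illness", "president_dies"},
]

def is_sufficient(x, y, situations):
    """x is sufficient for y: every situation containing x also contains y."""
    return all(y in s for s in situations if x in s)

def is_necessary(x, y, situations):
    """x is necessary for y: every situation containing y also contains x."""
    return all(x in s for s in situations if y in s)

# On this toy record the shot is neither sufficient nor necessary for the death.
shot_suffices = is_sufficient("shot", "president_dies", situations)
shot_needed = is_necessary("shot", "president_dies", situations)
```

On this reading, `is_sufficient(x, y, ...)` and `is_necessary(y, x, ...)` evaluate the same regularity, which is one way of seeing the inter-definability just mentioned.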

Considering our assassin, how does his shot count as a cause, according to the INUS account?

Take the I of INUS first. The assassin’s shot was clearly Insufficient for the president’s death. The president might suddenly have dipped his head to bestow a medal on a citizen (Forsyth, 1971). All sorts of things can and do intervene on such occasions. Shots of this nature are not universally followed by deaths. c is Insufficient for e.

Second, take the N. The shot is clearly Necessary in some sense for the death. In that situation, without the shot, there would have been no death. In strict regularity-talk, such situations are not followed by deaths in the absence of a shot. At the same time, we can hardly say that shots are required for presidents to die; most presidents find other ways to discharge this mortal duty. Mackie explains this limited necessity by saying not that c is Necessary for e, but that c is a Necessary part of a larger condition that preceded e.

Moving to the U, this larger condition is Unnecessary for the effect. There are plenty of presidential deaths caused by things other than shots, as just discussed; this was the reason we saw for not saying that the shot is necessary for the death. c is an Insufficient but Necessary part of an Unnecessary condition for e.

Finally, the S. The condition of which c is a part is unnecessary (so far as the occurrence of e is concerned), but it is sufficient. e happens, and it is no coincidence that it does. In strict regularity talk, every such condition is followed by an E. There is no way for an assassin to shoot just so, in just those conditions, which include the non-ducking of the president, his lack of a bulletproof vest, and so forth, and for the president not to die. Thus c is an Insufficient but Necessary part of an Unnecessary but Sufficient condition for e. To state it explicitly:

c is a cause of e if and only if c is a necessary but insufficient part of an unnecessary but sufficient condition for e.
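The four clauses can be checked mechanically in a toy setting. The Python sketch below is only an illustration under stated assumptions: the names `effect_occurs` and `is_inus`, the factor labels, and the list `WAYS_TO_DIE` are all hypothetical, and the list is a stipulated stand-in for whatever regularities actually hold, not something Mackie supplies. Necessity and sufficiency are read materially, as above.

```python
from typing import FrozenSet, List

Factors = FrozenSet[str]

# Stipulated toy regularities (an assumption for illustration): the effect
# follows whenever every factor in at least one of these sets is present.
WAYS_TO_DIE: List[Factors] = [
    frozenset({"shot", "president_does_not_duck", "no_vest"}),
    frozenset({"poison", "no_antidote"}),
]


def effect_occurs(present: Factors, regularities: List[Factors]) -> bool:
    """True if the factors present include some complete sufficient set."""
    return any(way <= present for way in regularities)


def is_inus(factor: str, condition: Factors, regularities: List[Factors]) -> bool:
    """Check the four INUS clauses for `factor` as a part of `condition`."""
    if factor not in condition:
        return False
    # I: the factor on its own is not followed by the effect.
    insufficient = not effect_occurs(frozenset({factor}), regularities)
    # N: without the factor, the rest of the condition is not sufficient.
    necessary_part = not effect_occurs(condition - {factor}, regularities)
    # U: the effect can come about without the whole condition being present.
    unnecessary = any(not condition <= way for way in regularities)
    # S: the condition as a whole is always followed by the effect.
    sufficient = effect_occurs(condition, regularities)
    return insufficient and necessary_part and unnecessary and sufficient


shooting = frozenset({"shot", "president_does_not_duck", "no_vest"})
shot_is_inus = is_inus("shot", shooting, WAYS_TO_DIE)

# Adding an idle factor keeps the condition sufficient, but that factor is
# not a necessary part of it, so it fails the N clause.
padded = shooting | {"nose_scratch"}
scratch_is_inus = is_inus("nose_scratch", padded, WAYS_TO_DIE)
```

On these stipulations the shot passes all four clauses, while an idle factor bolted onto the same condition fails the N clause, which is the sense in which the account picks out a special part of the whole cause.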

In essence, Mackie borrows Mill’s “whole cause” idea, but drops the implausible idea that “cause” strictly refers to the “whole cause”. Instead, he makes “cause” refer to a part of the whole cause, one that satisfies the special conditions.

As well as addressing the problem of enormousness, which is fundamentally a plausibility objection, Mackie intends his INUS account to address the further and probably more pressing objections which follow.

ii. The Problem of the Common Cause

An immediate problem for any regularity account of causation is that, just as effects have many causes, causes also have many effects, and these effects may accompany each other very regularly. Recall Mill’s clarification that effects need not be constantly preceded by the same causes, and that “constant conjunction” was in this sense directional: same causes are followed by same effects, but not vice versa. This is strongly intuitive—as the saying goes, there is more than one way to skin a cat. Essentially, Mill tells us that we do not have to worry that effects are not always preceded by the same causes.

However, we are still left in a predicament, even with this unidirectional constant conjunction of same-cause-same-effect. When combined with the fact that a single cause always has multiple effects, we seem to land up with the result that constant conjunctions will also obtain between these effects. Cs are always followed by E1s, and Cs are always followed by E2s. So, whenever there is a C, we have an E1 and an E2, meaning that whenever we have an E1, we have an E2, and vice versa.

How does a regularity theory get out of this without dropping the fundamental analytical tool it uses to distinguish cause from coincidence, the unfailing succession of same effect on same cause, knowing that the singular “effect” should actually be substituted with the plural “effects”?

Here is an example of the sort of problem for naïve regularity theories that Mackie’s account is supposed to solve. My alarm sounds, and I get out of bed. Shortly afterwards, our young baby starts to scream. This happens daily: the alarm wakes me up, and I get out of bed; but it also wakes the baby up. I know that it is not my getting out of bed that causes the baby to scream. How? Because I get out of bed in the night at various other times, and the baby does not wake up on those occasions; because my climbing out of bed is too quiet for a baby in another room to hear; and for other such reasons. Also, even when I sleep through the alarm (or try to), the baby wakes up. But what if the connections were as invariable as each other—there were no (or equally many) exceptions?

Consider this classic example. The air pressure drops, and my barometer’s needle indicates that there will be a storm. There is a storm. My barometer’s needle dropping obviously does not cause the storm. But, as a reliable storm-predictor, it is followed by a storm regularly—that is the whole point of barometers.
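The predicament can be seen in a toy record of observations. The Python sketch below is an illustration only: the function names and the five-day history are hypothetical, and the assumption that barometer and storm follow the pressure without exception is stipulated to match the idealized case of equally invariable connections.

```python
from typing import Dict, List

Day = Dict[str, bool]


def make_days() -> List[Day]:
    """Toy history: barometer and storm each simply follow the pressure."""
    days = []
    for pressure_drops in (True, False, True, True, False):
        days.append({
            "pressure_drops": pressure_drops,
            "barometer_falls": pressure_drops,  # effect 1 of the common cause
            "storm": pressure_drops,            # effect 2 of the common cause
        })
    return days


def always_followed_by(days: List[Day], earlier: str, later: str) -> bool:
    """Naive regularity test: every day with `earlier` also has `later`."""
    return all(day[later] for day in days if day[earlier])


days = make_days()
cause_to_storm = always_followed_by(days, "pressure_drops", "storm")
barometer_to_storm = always_followed_by(days, "barometer_falls", "storm")
```

Both checks come out true on this history, so a test that looks only at unfailing succession cannot separate the genuine cause from the mere co-effect.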

Mackie’s INUS theory supplies the following answer. The barometer’s falling is not an INUS condition for the storm’s occurrence, because situations that are exactly similar except for the absence of a barometer can and do occur. The falling of the barometer may be a part of a sufficient condition for the storm to occur, but it is not a necessary part of that condition. Storms happen even when no barometer is there to predict them. (Likewise, the storm is not an INUS condition for the barometer falling, in case that is a worry despite the temporal order, because barometers can be induced to fall in vacuum chambers.)

Thus the intuition I have in the alarm/baby case is the correct one; the regularity between alarm and baby waking is persistent regardless of my getting out of bed, and that between my getting out of bed and the baby waking fails in otherwise similar occasions where there is no alarm.

However, this all depends on a weakening of the initial idea behind the regularity theory, since it amounts to accepting that there are many cases of apparent causation without underlying regularity, which are therefore true, not in virtue of match-strikes being followed by flames, but for a more complicated reason, albeit one that makes use of the notion of regularity. Hume’s idea that we observe like causes followed by like effects suffers a blow, and together with it, the epistemological motivation of the regularity theory, as well as its theoretical elegance. It is to this extent a concession on the part of the regularity theory. There are other cases where we do want to say that c causes e even though Cs are not always followed by Es.

In fact, such is the majority of cases. Striking the match causes it to light even though many match-strikes are not followed by a flame: the strike fails to produce a spark, the match breaks, and so forth. There are similar scenarios in which the match is struck but there is no flame; yet the apparent conclusion that the match strike does not cause the flame cannot be accepted. Perhaps we must insist that the scenarios differ because the match is not struck exactly so, but now we are not analyzing the meaning of “striking the match caused it to light”, since we are substituting an unknown and complicated event for “striking the match”, for the sake of insisting that causes are always followed by their effects, which is a failing of the analytical tool.

Common cause situations thus present prima facie difficulties for the regularity account. Mackie’s account may solve the problem; nonetheless, if there were an account of causation that did not face the problem in the first place, or that dealt with the problem with less cost to the guiding idea of the regularity approach and with less complication, it would be even more attractive. This is one of the primary advantages claimed by the two major alternatives, counterfactual and probabilistic accounts, which are discussed in their two appropriate subsections below.

iii. The Problem of Overdetermination

As noted in the subsubsection on the problem of the common cause, many effects can be caused in more than one way. A president may be assassinated with a bullet or a poison. The regularity theory can deal with this easily by confining the relevant kind of regularity to one direction. In Mackie’s account, causes are not sufficient for their effects, which may occur in other ways. But the problem has another form. If an effect may occur in more than one way, what is to stop more than one of these ways from being present at the same time? Assassin 1 shoots the president, but Assassin 2’s on-target bullet would have done the job if Assassin 1 had missed. c causes e, but c’ would have caused e otherwise.

Such situations are referred to by various names. This article uses the term redundancy as a catch-all for any situation like this, in which a cause is “redundant” in the sense that the effect would have occurred without the actual cause. (Strictly, all that is required is that the effect might have occurred, because the negation of “would not” is “might” (Lewis, 1973b).) Within redundancy, we can distinguish symmetric from asymmetric overdetermination. Symmetric overdetermination occurs when two causes appear absolutely on a par. Suppose two assassins shoot at just the same time, and both bullets enter the president’s heart at just the same time. Either would have sufficed, but in the event, both were present. Neither is “more causal”. The example is not contrived. Such situations are quite common. You and I both shout “Look out!” to the pedestrian about to step in front of a car, and both our shouts are loud enough to cause the pedestrian to jump back. And so forth.

In asymmetric overdetermination, one of the events is naturally regarded as the cause, while the other is not, but both are sufficient in the circumstances for the effect. One is a back-up, which would have caused the effect had the actual cause not done so. For example, suppose that Assassin 2 had fired a little later than Assassin 1, and that the president was already dead by the time Assassin 2’s bullet arrived. Assassin 2’s shot did not kill the president, but had Assassin 1 not shot (or had he not shot accurately enough), Assassin 2’s shot would still have killed the president. Such cases are more commonly referred to as preemption, which is the terminology used in this article since it is more descriptive: the first cause preempts the second one. Again, preemption examples need not be contrived or far-fetched. Suppose I shout “Look out!” a moment after you, but still soon enough for the pedestrian to step back. Your shout caused the pedestrian to step back, but had you not shouted, my shout would have caused the pedestrian to step back. There is nothing outlandish about this; such things happen all the time.

The difficulty here is that the INUS account finds two INUS conditions where there is only one cause. Assassin 1’s shot is a necessary part of a sufficient condition for the effect. But so is Assassin 2’s shot. However, only Assassin 1’s shot is the true cause.

In the symmetric overdetermination case, one may take the view that they are both equally causes of the effect in question. However, there is still the preemption case, where Assassin 1 did the killing and not Assassin 2. (If you doubt this, imagine they are a pair of competitive twins, counting their kills, and thus racing to be first to the president in this case; Assassin 1 would definitely insist on chalking this one up as a win).

Causal redundancy has remained a thorn in the side of all mainstream analyses of causation, including the counterfactual account (see the appropriate subsection). What makes it so troubling is that we use this feature of causation all the time. Just as we exploit the fact that causes have multiple effects when we are devising measuring instruments, we exploit the fact that we can bring a desired effect about in more than one way every time we set up a failsafe mechanism, a Plan B, a second line of defense, and so forth. Causal redundancy is no mere philosopher’s riddle: it is a useful part of our pragmatic reasoning. Accounting for the fact that we use “cause” in situations where there is also a redundant would-be cause thus seems central to explicating “cause” at all.

iv. The Problem of Unrepeatability

This is less discussed than the problems of the common cause and overdetermination, but it is a serious problem for any regularity account. The problem was elegantly formulated by Bertrand Russell, who pointed out that, once a cause is specified so fully that its effect is inevitable, it is at best implausible and perhaps (physically) impossible that the whole cause occur more than once (Russell, 1918). The fundamental idea of the regularity approach is that cause-effect pairs instantiate regularities in a way that coincidences do not. This objection tells against this fundamental idea. It is not clear what the regularity theorist can reply. She might weaken the idea of regularity to admit of exceptions, but then the door is open to coincidences, since my nose-scratching just before the president’s death might be absent on another such occasion, and yet this might no longer count against its candidacy for cause. At any rate, the problem is a real one, casting doubt on the entire project of analyzing causation in terms of regularity.

We might respond by substituting a weaker notion than true sufficiency: something like “normally followed by”. Nose-scratchings are not normally followed by presidents’ deaths. However, this is not a great solution for regularity theories, because (a) the weaker notion of sufficiency is a departure from the sort of clarity that regularity theorists would otherwise celebrate, and (b) a similar battery of objections will apply: we can find events that are normally followed by others merely by chance. Indeed, if enough things happen, so that there are enough events, we can be very confident of finding at least some such patterns of events.

b. Counterfactual Theories

Mackie developed a counterfactual theory of the concept of causation, alongside his theory of causation in objects as regularity. However, at almost exactly the same time, a philosopher at the other end of his career (near the start) developed a theory sharing deep convictions about the fundamental nature of regularities, the priority of singular causal judgements, and the use of counterfactuals to supply their semantics, and yet setting the study of causation on an entirely new path. At the time of writing, this approach has dominated the philosophical landscape for nearly half a century, not only as a prominent theory of causation, but as an outstanding piece of philosophical work. It has thus served as an exemplar for analytic metaphysicians, and as a central part of the 1970s story of the emboldening of analytic metaphysics, following years in exile while positivism reigned.

David Lewis’s counterfactual theory of causation (Lewis, 1973a) starts with the observation that, commonly, if the cause had not happened, the effect would not have happened. To build a theory from this observation, Lewis had three major tasks. First, he had to explain what “would” means in this context; he had to provide a semantics for counterfactuals. Second, he had to deal with cases where counterfactuals appear to be true without causation being present, so that counterfactual dependence appears not to be sufficient for causation (since if it were, a lot of non-causes would be counted as causes). Third, he had to deal with cases where it appears that, if the cause had not happened, the effect would still have happened anyway: cases of causal redundancy, where counterfactual dependence appears not to be necessary for causation.

For a considerable period of time, the consensus was that Lewis had succeeded with the first two tasks but failed the third. In the early years of the twenty-first century, however, the second task (establishing that counterfactual dependence is sufficient for causation) also received critical scrutiny.

Lewis’s theory of causation does not state that effect counterfactually depends on cause, but rather, that c causes e if and only if there is a causal chain running from c to e whose links consist in a chain of counterfactual dependence. The reason for the use of chains is explained by the need to respond to the problem of preemption, as explained in the subsection covering the problem of causal redundancy. Counterfactual dependence is thus not a necessary condition for causation. However, it is a sufficient condition, since whenever we do find counterfactual dependence (of the “right sort”), we find causation. On his view, counterfactual dependence is thus sufficient but not necessary for causation; what is necessary is a chain of counterfactual dependence, but not necessarily the overarching dependence of effect on cause.

The best way to understand Lewis’s theory is through his responses to problems (as he himself sets it out). This is the approach taken in the remainder of this subsection.

i. The Problems of Common Cause, Enormousness and Unrepeatability

Lewis takes his theory to be able to deal easily with the problem of the common cause, which he parcels with another problem he calls the problem of effects. This is the problem that causes might be thought to counterfactually depend on their effects as well as the other way around. Not so, says Lewis, because counterfactual dependence is almost always forward-tracking (Lewis, 1973a, 1973b, 1979). The cases where it is not are easily identifiable, and these occurrences of counterfactual dependence are not apt for analyzing causation, just as counterfactuals representing constitutive relations (such as “If I were not typing, I would not be typing fast”) are not apt.

Lewis’s argument for the ban on backtracking is as follows. Suppose a spark causes a fire. We can imagine a situation where, with a small antecedent change, the fire does not occur. This change may involve breaking a law of nature (Lewis calls such changes “miracles”) but after that, the world may roll on exactly as it would under our laws (Lewis, 1979). This world is therefore very similar to ours, differing in one minor respect.

Now consider what we mean when we start a sentence with “If the fire had not occurred…” By saying so, we do not mean that the spark would not have occurred either. For otherwise, we would also have to suppose that the wire was never exposed, and thus that the careless slicing of a workman’s knife did not occur, and therefore that the workman was more conscientious, perhaps because his upbringing was different, and that of his parents before him, and…? Lewis says: that cannot be. When we assert a counterfactual, we do not mean anything like that at all. Rather, we mean that the spark still occurred, along with most other earlier events; but for some or other reason, the fire did not.

Why this is so is a matter of considerable debate, and much further work by Lewis himself. For these purposes, however, all that is needed is the idea that, by the time when the fire occurs, the spark is part of history, and there will be some other way to stop the fire—some other small “miracle”—that occurs later, and thus preserves a larger degree of historical match with the actual world, rendering it more similar.

The problem of the common cause is then solved by way of a simple parallel. It might appear that there is counterfactual dependence between the effects of a common cause: between barometer falling and storm, for example. Not so. If the barometer had not fallen, the air pressure, which fell earlier, would remain fallen; and the storm would have occurred anyway. If the barometer had not fallen, that would be because some tiny little “miracle” would have occurred shortly beforehand (even Lewis’s account requires at least this tiny bit of backtracking, and he is open about that.) This would lead to its not falling when it should. In a nutshell, if the barometer had not fallen, it would have been broken.

Put that way, the position does not sound so attractive; on the contrary, it sounds somewhat artificial. Indeed, this argument, and Lewis’s theory of causation as a whole, depend heavily on a semantics for counterfactuals according to which the closest world at which the antecedent is true determines the truth of the counterfactual. If the consequent is true at that world, the counterfactual is true; otherwise, not. (Where there is no possible world at which the antecedent is true, we have vacuous truth.) This semantics is complex and subject to many criticisms, but it is also an enormous intellectual achievement, partly because a theory of causation drops out of it virtually for free, or so it appears when the package is assembled. There is no space here to discuss the details of Lewis’s theory of counterfactuals (for critical discussions see in particular: Bennett, 2001, 2003; Elga, 2000; Hiddleston, 2005), but if we accept that theory, then his solution to the problem of effects follows easily.

Lewis deals even more easily with the problems of enormousness and unrepeatability that trouble regularity theories. The problem of enormousness is that, to ensure a truly exceptionless regularity, we must include a very large portion of the universe indeed into the cause (Mill’s doctrine of the “whole cause”). According to Mill, strictly speaking, this is what “cause” means. But according to common usage, it most certainly is not what “cause” means: when I say that the glass of juice quenched my thirst, I am not talking about Jupiter, the Andromeda galaxy, and all the other heavenly bodies exerting forces on the glass, the balance of which was part of the story of the glass rising to my lips. I am talking about a glass of juice.

The counterfactual theory deals with this easily. If I had not drunk the juice, my thirst would not have been quenched. This is what it means to say that drinking the juice caused my thirst to be quenched; which is what I mean when I say that it quenched my thirst. There is no enormousness. There are many other causes, because there are many other things that, had they not been so, would have resulted in my thirst not being quenched. But, Lewis says, a multiplicity of causes is no problem; we may have all sorts of pragmatic reasons for singling some out rather than others, but these do not have implications for the underlying concept of cause, nor indeed for the underlying causal facts.

The problem of unrepeatability was that, once inflated to the enormous scale of a whole cause, it becomes incredible that such things recur at all, let alone regularly. Again, there is no problem here: ordinary events like the drinking of juice can easily recur.

While later subsubsections discuss the problems that have proved less tractable for counterfactual theories, we should firstly note that even if we set aside criticisms of Lewis’s theory of counterfactuals, his solution to the problem of the common cause is far less plausible on its own terms than Lewis and his commentators appear to have appreciated. It is at least reasonable to suggest that we use barometers precisely because they track the truth of what they predict (Lipton, 2000). It does not seem wild to think that if the barometer had not fallen, the storm would not after all have been going to occur: more naturally, the storm would not after all have been impending. Lewis’s theory implies that in the nearest worlds where the barometer does not fall, my picnic plans would have been rained out. If I believed that, I would immediately seek a better barometer.

Empirical evidence suggests that there is a strong tendency for this kind of reasoning in situations where causes and their multiple effects are suitably tightly connected (Rips, 2010). Consider a mechanic wondering why the car will not start. He tests the lights, which also do not work. So he infers that it is probably the battery. It is. But in Lewis’s closest world where the lights do work, the battery is still flat: an outrageous suggestion for both the mechanic and any reasonable similarity-based semantics of counterfactuals (for another instance of this objection see Hausman, 1998). Or, if not, then Lewis must accept that the lights’ not lighting causes the car not to start (or vice versa). Philosophers are not usually very practical and sometimes this shows up; perhaps causation is a particularly high-risk area in this regard, given its practical utility.

ii. The Problem of Causal Redundancy

If Assassin 1 had not shot (or had missed) then the president still would (or might) have died, because Assassin 2 also shot. Recall that two important kinds of redundancy can be distinguished (as discussed in the subsubsection on the problem of overdetermination). One is symmetric overdetermination, where the two bullets enter the heart at the same time. Lewis says that in this case our causal intuitions are pretty hazy (Lewis, 1986). That seems right. Imagine a firing squad: what would we say about the status of Soldier 1’s bullet, Soldier 2’s bullet, Soldier 3’s, … when they are all causally sufficient but none of them causally necessary? We would probably want to say that it was the whole firing squad that was the cause of the convict’s death. So we should in those residual overdetermination cases that cannot be dealt with in other ways, says Lewis. Assassin 1 and Assassin 2 are together the cause. The causal event is the conjunction of these two events. Had that event not occurred, the effect would not have occurred. Lewis runs into some trouble with the point that the negation of a conjunction is achieved by negating just one of its conjuncts, and thus Assassin 1’s not shooting is enough to render the conjunctive event absent, even if Assassin 2 had still shot and the president would still have died. Lewis says that we have to remove the whole event when we are assessing the relevant counterfactuals.

This starts to look less than elegant; it lacks the conviction and sense of insight that characterize Lewis’s bolder propositions. However, our causal intuitions are so unclear that we should take the attitude that spoils go to the victor (meaning, the account that has solved all the cases where our intuitions are clear). Even if this solution to symmetric overdetermination is imperfect, which Lewis does not admit, the unclarity of our intuitions would mean that there is no basis to contest the account that is victorious in other areas.

Preemption is the other central kind of causal redundancy, and it has proved a persistent problem for counterfactual approaches to causation. It cannot be set aside as a “funny case” in the way of symmetric overdetermination, because we do have clear ideas about the relevant causal facts, but they do not play nicely with counterfactual analysis. Assassins 1 and 2 may be having a competition as to who can chalk up more “kills”, in which case they will be deeply committed to the truth of the claim that the preempting bullet really did cause the death, despite the subsequent thudding of the loser’s bullet into the presidential corpse. A second later or a day later—it would not matter from their perspective.

Lewis’s attempted solution to the problem of preemption seeks, once again, to apply features of his semantics for counterfactuals. The two features applied are non-transitivity and, once again, non-backtracking.

Counterfactuals are unlike indicative conditionals in not being transitive (Lewis, 1973b, 1973c). For indicatives, the pattern If A then B, if B then C, therefore if A then C is valid. But not so for counterfactuals. If Bill had not gone to Cambridge (B), he would have gone to Oxford (C); and if Bill had been a chimpanzee (A), he would not have gone to Cambridge (B). If counterfactuals are transitive, then it can be concluded that, if Bill had been a chimpanzee (A), he would have gone to Oxford (C). Notwithstanding its prima facie appeal, this argument has not been found compelling, and the moral usually drawn is that transitivity fails for counterfactuals.
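The failure of transitivity can be reproduced in a miniature closest-world model. The Python sketch below is an illustration under loud assumptions: the three worlds, their numeric distances from actuality, and the names `would`, `is_chimpanzee`, `not_at_cambridge`, and `at_oxford` are all hypothetical, and Lewis’s similarity ordering is not actually a number. A counterfactual counts as true when the consequent holds at every closest world where the antecedent holds.

```python
from typing import Any, Callable, Dict, List

World = Dict[str, Any]
Proposition = Callable[[World], bool]

# Hypothetical worlds with stipulated distances from actuality.
WORLDS: List[World] = [
    {"name": "actual", "distance": 0, "species": "human", "university": "Cambridge"},
    {"name": "w1", "distance": 1, "species": "human", "university": "Oxford"},
    {"name": "w2", "distance": 5, "species": "chimpanzee", "university": None},
]


def would(antecedent: Proposition, consequent: Proposition,
          worlds: List[World] = WORLDS) -> bool:
    """'If antecedent had been the case, consequent would have been the case.'"""
    antecedent_worlds = [w for w in worlds if antecedent(w)]
    if not antecedent_worlds:
        return True  # vacuously true: no world makes the antecedent true
    nearest = min(w["distance"] for w in antecedent_worlds)
    closest = [w for w in antecedent_worlds if w["distance"] == nearest]
    return all(consequent(w) for w in closest)


def is_chimpanzee(w: World) -> bool:       # A
    return w["species"] == "chimpanzee"


def not_at_cambridge(w: World) -> bool:    # B
    return w["university"] != "Cambridge"


def at_oxford(w: World) -> bool:           # C
    return w["university"] == "Oxford"


a_to_b = would(is_chimpanzee, not_at_cambridge)
b_to_c = would(not_at_cambridge, at_oxford)
a_to_c = would(is_chimpanzee, at_oxford)
```

In this model both premises hold but the conclusion does not, because the closest world where Bill does not go to Cambridge is a different world from the closest one where he is a chimpanzee.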

Lewis thus suggests that causation consists in a chain of counterfactual dependence, rather than a single counterfactual. Suppose we have a cause c and an effect e, connected by a chain of intermediate events d1, … dn. Lewis says: it can be false that if c had not occurred then e would not have occurred, yet true that c causes e, provided that there are events d1, … dn such that if c had not occurred then d1 would not have occurred, and… if dn (henceforth dn is simply called d for readability) had not occurred, then e would not have occurred.
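The chain condition amounts to a reachability test over single counterfactual dependences. The Python sketch below is an illustration only: the event names, the table `DEPENDS`, and the functions `depends_directly` and `causes` are hypothetical, and the dependences are simply stipulated for a preemption story rather than derived from any semantics for counterfactuals.

```python
from collections import deque
from typing import Dict, Set

# Stipulated counterfactual dependences (an assumption for illustration).
# "x": {"y"} reads: had x not occurred, y would not have occurred.
DEPENDS: Dict[str, Set[str]] = {
    "assassin1_shoots": {"bullet1_strikes"},
    "bullet1_strikes": {"president_dies"},
    "assassin2_shoots": {"bullet2_strikes"},
    "bullet2_strikes": set(),  # the death does not depend on this strike
}


def depends_directly(c: str, e: str, dependence: Dict[str, Set[str]]) -> bool:
    """Single-step dependence: had c not occurred, e would not have."""
    return e in dependence.get(c, set())


def causes(c: str, e: str, dependence: Dict[str, Set[str]]) -> bool:
    """True if a chain of counterfactual dependence runs from c to e."""
    seen = {c}
    queue = deque([c])
    while queue:
        current = queue.popleft()
        for nxt in dependence.get(current, set()):
            if nxt == e:
                return True
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return False


direct = depends_directly("assassin1_shoots", "president_dies", DEPENDS)
chained = causes("assassin1_shoots", "president_dies", DEPENDS)
backup = causes("assassin2_shoots", "president_dies", DEPENDS)
```

Here the death does not depend directly on Assassin 1’s shot, yet a chain links them, and no chain links Assassin 2’s shot to the death. Whether the stipulated links are in fact true counterfactuals is exactly what the rest of the solution has to establish.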

This is one step of the solution, because it provides for the effect to fail to counterfactually depend upon Assassin 1’s shot, yet Assassin 1’s shot to still be the cause. Provides for, but does not establish. The obvious remaining task is to establish that there is a chain of true counterfactuals from Assassin 1’s shot to the president’s death—and, if there is, that there is not also a chain from Assassin 2’s shot.

This is where the second deployment of a resource from Lewis’s semantics for counterfactuals comes into play (and this is sometimes omitted from explanations of how Lewis’s solution to preemption is supposed to work). His idea is that, at the time of the final event in the actual causal chain, d, the would-be causal chain has already been terminated, thanks to something in the actual causal chain. Call the corresponding final event of the would-be chain d*. d* has already failed to happen, so to speak: its time has passed. So the counterfactual “~d → ~e” is true, because d* would not occur in the absence of d. ~d-worlds where d* occurs are further from actuality than some ~d-worlds where, as in actuality, d* does not occur.

This solution may work for some cases; these have become known as early preemption cases. But it does not work for others, and these have become known as late preemption. Consider the moment when Assassin 1’s bullet strikes the president, and suppose that this is the last event, d, in the causal chain from Assassin 1’s shot c to the president’s death e. Then ask what would have happened if this event had not happened—by a small miracle the bullet deviated at the last moment, for example. At that moment, Assassin 2’s bullet was speeding on its lethal path towards the president. On Lewis’s view, after the small miracle by which Assassin 1’s bullet does not strike (after ~d), the world continues to evolve as if the actual laws of nature held. So Assassin 2’s bullet strikes the president a moment later, killing him.
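The contrast between the two kinds of case can be sketched in a toy model. The Python below is an illustration under stated assumptions: the function names and the `backup_stands_down` switch are hypothetical, the early case is modelled (by stipulation) as Assassin 2 shooting only if Assassin 1 does not, and setting `bullet1_strikes` by hand stands in for the small miracle that removes d while earlier events stay as they were.

```python
from typing import Dict, Optional


def run(backup_stands_down: bool,
        bullet1_strikes: Optional[bool] = None) -> Dict[str, bool]:
    """Toy model of the shooting; `bullet1_strikes` can be overridden."""
    assassin1_shoots = True
    # Early preemption: Assassin 2 shoots only if Assassin 1 does not.
    # Late preemption: Assassin 2 shoots regardless.
    assassin2_shoots = (not assassin1_shoots) if backup_stands_down else True
    # d is the final event of the actual chain: bullet 1 striking.
    d = assassin1_shoots if bullet1_strikes is None else bullet1_strikes
    bullet2_strikes = assassin2_shoots
    return {"d": d, "president_dies": d or bullet2_strikes}


def death_depends_on_d(backup_stands_down: bool) -> bool:
    """Does the death occur actually, but fail to occur once d is removed?"""
    actual = run(backup_stands_down)
    without_d = run(backup_stands_down, bullet1_strikes=False)
    return actual["president_dies"] and not without_d["president_dies"]


early = death_depends_on_d(backup_stands_down=True)
late = death_depends_on_d(backup_stands_down=False)
```

In the early case the backup has already been cut off, so removing d removes the death; in the late case the second bullet is still on its way, so the final link of the chain fails.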

Various solutions have been tried. We might specify the president’s death very precisely, as the death that occurred just then, a moment earlier than the death that would have occurred had Assassin 2’s bullet struck; and the angle of the bullet would have been a bit different; and so forth. In short: that death would not have occurred, but for Assassin 1, even if some other, similar death, involving the same person and a similar cause, would have occurred in its place. But Lewis himself provides a compelling response, which is simply that this is not at all what phrases like “the president died” or “the death of the president” refer to when we use them in a causal statement. Events may be more or less tightly specified, and there can be a distinction drawn between actual and counterfactual deaths, tightly specified. But that is not the tightness of specification we actually use in this causal judgement, as in many others.

A related idea is to accept that the event of the president’s death is the same in the actual and counterfactual cases, but to appeal to small differences in the actual effect that would have happened if the actual cause had been a bit different. Therefore, in very close worlds, where Assassin 1 shot just a moment earlier or later, but still soon enough to beat Assassin 2, or where a fly in the bullet’s path had caused just a minuscule deviation, or similar ones, the death would have been just minutely different. It still counts as the same death-event, but with just slightly different properties. Influence is what Lewis calls this counterfactual co-variance of event properties, and he suggests that a chain of influence connects cause and effect, but not preempted cause and effect (Lewis, 2004a).

However, there even seem to be cases where influence fails, notably the trumping cases pressed in particular by Jonathan Schaffer (2004). Merlin casts a spell to turn the prince into a frog at the stroke of midnight. Morgana casts the same spell, but at a later point in the day. It is the way of magic, suppose, that the first spell cast is the one that operates; had Morgana cast a spell to turn the prince into a toad instead, the prince would nevertheless have turned into a frog, because Merlin’s earlier spell takes priority. Yet she in fact specified a frog. If Merlin had not cast his spell, the prince would still have turned into a frog—and there would have been no difference at all in the effect. There is no chain of influence.

We do not have to appeal to magic for such instances. I push a button to call an elevator, which duly illuminates, but even so, an impatient or unobservant person arriving directly after me pushes it again. The elevator arrives. It does so in just the same way and in just the same time as if I had not pushed it, or had pushed it just a tiny moment earlier or later, more or less forcefully, and so forth. In today’s world, where magic is rarely observed, electrical mediation of causes and effects is a fruitful hunting ground for cases of trumping.

There is a large literature on preemption, but the generally accepted conclusion is that, despite Lewis’s extraordinary ingenuity, the counterfactual analysis of causation cannot be completed. Many philosophers are still attracted to a counterfactual approach: indeed it is an active area of research outside philosophy (as in interdisciplinary work), offering as it does a framework for technical development and thus for operationalization in the business of inferring causes. But for analyzing causation (for providing a semantic analysis, for saying what “causation” means), there is general acceptance that some further resource is needed. Counterfactuals are clearly related to causation in a tight way, but the nature of that connection still appears frustratingly elusive.

iii. A New Problem: Causal Transitivity

Considerably more could be said about the counterfactual analysis of causation; it dominated philosophical attention for decades, and drew more attention than any other approach after superseding the regularity theories in the 1970s. Since discussions of preemption dried up, attention has shifted to problems for the less controversial claim that counterfactual dependence is sufficient for causation. One such problem is briefly introduced here: transitivity.

In Lewis’s account, and more broadly, causation is often supposed to be transitive, even though counterfactual dependence is not. This is central to Lewis’s response to the problem of preemption. It also seems to tie in with the “non-discriminatory” notion of cause, according to which my grandmother’s birth is among the causes, strictly speaking, of my writing these words, even if we rarely mention it.

To say that a relation R is transitive is to say that if R(x,y) and R(y,z) then R(x,z). There seem to be cases showing that causation is not like this after all. Hiker sees a boulder bounding down the slope towards him, ducks, and survives. Had the boulder not bounded, he would not have ducked, and had he not ducked, he would have died. There is a chain of counterfactual dependence, and indeed a chain of causation. But there is not an overarching causal relation. The bounding boulder did not cause Hiker’s survival.
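The structure of the Hiker case can be laid out in a small Python sketch. It is only a toy model under stated assumptions: the function `world` and its two variables are hypothetical, and counterfactuals are evaluated simply by changing one input of a deterministic model, which is a crude stand-in for Lewis’s closest-world semantics rather than an implementation of it.

```python
def world(boulder_falls, ducks=None):
    """Return (ducks, survives) for one scenario.

    Passing ducks=None lets Hiker react naturally to the boulder;
    passing True or False overrides his reaction (a hypothetical device).
    """
    if ducks is None:
        ducks = boulder_falls  # Hiker ducks exactly when a boulder comes
    survives = (not boulder_falls) or ducks
    return ducks, survives


# Actual case: the boulder falls, Hiker ducks, Hiker survives.
actual_ducks, actual_survives = world(boulder_falls=True)

# Step 1: had the boulder not fallen, Hiker would not have ducked.
no_boulder_ducks, no_boulder_survives = world(boulder_falls=False)

# Step 2: had Hiker not ducked (boulder still falling), he would have died.
_, no_duck_survives = world(boulder_falls=True, ducks=False)

duck_depends_on_boulder = actual_ducks != no_boulder_ducks
survival_depends_on_duck = actual_survives != no_duck_survives
survival_depends_on_boulder = actual_survives != no_boulder_survives

print(duck_depends_on_boulder, survival_depends_on_duck, survival_depends_on_boulder)
```

Each link in the chain shows dependence, but the endpoints do not: without the boulder, Hiker survives anyway. That is the sense in which stepwise dependence fails to add up to an overarching relation.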

Cases of this kind, known as double prevention, have provoked various solutions, not all of which involve an attempt to “fix” the Lewisian approach. Ned Hall suggests that there are two concepts of causation, which conflict in cases like this (Hall, 2004). Alex Broadbent suggests that allowing backtracking counterfactuals in limited contexts makes it possible to introduce, as a necessary condition on causation, the dependence of cause on effect, a condition that cases of this kind fail (Broadbent, 2012). But their significance remains unclear.

c. Interventionism

There is a very wide range of other approaches to the analysis of causation, given the apparent dead ends that the big ideas of regularity and counterfactual dependence have reached. Some develop the idea of counterfactual dependence, but shift the approach from conceptual analysis to something less purely conceptual, more closely related to causal reasoning, in everyday and scientific contexts, and perhaps more focused on investigating and understanding causation than producing a neat and complete theory. Interventionism is the most well-known of these approaches.

Interventionism starts with the idea that causation is fundamentally connected to agency: to the fact that we are agents who make decisions and do things in order to bring about the goals we have decided upon. We intervene in the world in order to make things happen. James Woodward sets out to remove the anthropocentric component of this observation, to devise a characterization of interventions in broadly objective terms, and to use this as the basis for an account of how causal reasoning works, meaning how it manages to track how the world works and thus enables us to make things happen (Woodward, 2003, 2006).

Woodward’s interests are thus focused on causal explanation in particular, trying to answer the questions of what causal explanations amount to, what information they carry, what they mean. The notion of explanation he arrived at is analyzed and unpacked in detail. The target of analysis shifts from “c causes e”, not merely to “c explains e” (which was the target of much previous work in the philosophy of explanation), but to a full paragraph of explanation of why and how the temperature in a container increases when the volume is reduced in terms of the ideal gas law and the kinetic theory of heat.

Interventionism offers a different approach to thinking about causation, and perhaps the most difficult thing for someone approaching it from the perspective of the Western philosophical canon is to work out what exactly it achieves, or aims to achieve. It does not tell us precisely what causation itself is. It may help us understand causation; but if it does, the upshot is itself problematic: it is either a series of interesting observations, akin to those of J. L. Austin and the ordinary language philosophers, or an operationalizable concept of causation, one that might be converted into a fully automatic causal reasoning “module” to be implemented in a robot. The latter appears to be the goal of some in the non-philosophical world, such as Judea Pearl. Such efforts are ambitious and interesting, potentially illuminating the nature of causal inference, even if this potential is yet to be fully realized, and even if their significance remains in question so long as implementation remains hard to conceive.

Perhaps what interventionist frameworks offer is a language for talking about causation more precisely. So it is with Pearl, who is also a kind of interventionist, holding that causal facts can be formally represented in diagrams called Directed Acyclic Graphs displaying counterfactual dependencies between variables (Pearl, 2009; Pearl & Mackenzie, 2018). These counterfactual dependencies are assessed against what would happen if there were an intervention, a “surgical”, hypothetical one, to alter the value of only a (or some) specified variable(s). Formulating causal hypotheses in this way is meant to offer mathematical tools for analyzing empirical data, and such tools have indeed been developed by some, notably in epidemiology. In epidemiology, the Potential Outcomes Approach, which is a form of interventionism and a relative of Woodward’s philosophical account, attracts a devoted following. The primary insistence of its followers is on the precise formulation of causal hypotheses using the language of interventions (Hernán, 2005, 2016; Hernán & Taubman, 2008), which is a little ironic, given that a basis for Woodward’s philosophical interventionism was the idea of moving away from the task of strictly defining causation. The Potential Outcomes Approach constitutes a topic of intense debate in epidemiology (Blakely, 2016; Broadbent, 2019; Broadbent, Vandenbroucke, & Pearce, 2016; Krieger & Davey Smith, 2016; Vandenbroucke, Broadbent, & Pearce, 2016; VanderWeele, 2016), and its track record of actual discoveries remains limited; its main successes have been in re-analyzing old data which was wrongly interpreted at the time, but where the mistake is either already known or no longer matters.
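As a rough illustration of what a “surgical” intervention on a graph of variables might look like, consider the following Python sketch. It is not Pearl’s formalism or any library’s API. The three-variable model (falling air pressure driving both a barometer and a storm), the `weather_front` input, and the `run` helper are all assumptions introduced for illustration.

```python
# Hypothetical model: each variable is computed from its parents.
# Falling air pressure drives both the barometer and the storm.
MODEL = {
    "pressure_falls": lambda v: v["weather_front"],
    "barometer_falls": lambda v: v["pressure_falls"],
    "storm": lambda v: v["pressure_falls"],
}
ORDER = ["pressure_falls", "barometer_falls", "storm"]  # parents before children


def run(weather_front, do=None):
    """Evaluate the model; `do` maps variable names to values forced from outside."""
    do = do or {}
    values = {"weather_front": weather_front}
    for name in ORDER:
        # A "surgical" intervention overrides this variable's own equation
        # and leaves every other equation untouched.
        values[name] = do[name] if name in do else MODEL[name](values)
    return values


observed = run(weather_front=True)
jam_barometer = run(weather_front=True, do={"barometer_falls": False})
hold_pressure = run(weather_front=True, do={"pressure_falls": False})

print(observed["storm"], jam_barometer["storm"], hold_pressure["storm"])
```

In this toy model, forcing the barometer to stay up leaves the storm exactly as it was, whereas forcing the pressure to stay up removes the storm and the barometer reading together. The asymmetry under intervention is what the graph is meant to encode.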

If this sounds confusing, that is because it is. This is a very vibrant area of research. Those interested in interventionism are strongly advised not to confine themselves to the philosophical literature but to read at least a little of Judea Pearl’s (albeit voluminous) corpus, and to engage with the epidemiological debate on the Potential Outcomes Approach. The field has yet to receive its most concise and conceptually organized formulation, on which work is ongoing, but an initial lack of organization is indicative of a field still developing: exactly the kind of field in which someone looking to make a mark, or at least a contribution, should take an interest. Once the battle lines are drawn up, and the trenches are dug, the purpose of the entire war is called into question.

d. Probabilistic Theories

Probabilistic theories (for example: Eells, 1991; Salmon, 1993; Suppes, 1970) start with the idea that causes raise the probability of their effects. Striking a match may not always be followed by its lighting, but certainly makes it more likely; whereas coincidental antecedents, such as my scratching my nose, do not.

Probabilistic theories originate in part as an attempt to soften the excesses of regularity theories, given the absence of observable exceptionless regularities. More importantly, however, they are motivated by the observation that the world itself may be fundamentally indeterministic, if quantum physics withstands the test of time. A probabilistic theory could cope with a deterministic world as well as an indeterministic one, but a regularity theory could not. Moreover, given the shift in odds towards an indeterministic universe, the fights about regularity start to look scholastic, concerning the finer details of a superstructure whose foundations, never before critically examined, have crumbled upon exposure to the fresh air of empirical science.

Probabilistic approaches may be combined with other accounts, such as agency approaches (Price, 1991). Alternatively, probability may be taken as the primary analytical tool, and this approach has given rise to its own literature on probabilistic theories.

The first move of a probabilistic theory is to deal with the problem that effects raise the probability of other effects of a shared cause. To do so, the notion of screening off is introduced (Suppes, 1970). A cause has many effects, and conditionalizing on the cause alters their probabilities even if we hold the other effects fixed. But not so if we conditionalize on an effect. The probability of the storm occurring, given that the air pressure does not fall, is lower than the probability given that the air pressure does fall, even if we hold fixed the falling of the barometer; and vice versa. But if we hold fixed the air pressure falling (at, say, 1 atmosphere, as in actuality) while conditionalizing on the barometer, we do not see any difference in the probability of the storm between the case where the barometer falls and the case where it does not.

To unpack this a bit, consider all the occasions on which air pressure has fallen, all those on which barometers have fallen, and all those on which storms have occurred (and barometers have been present). The problem could then be stated like this. When air pressure falls, storm occurrences are very much more common than when it does not. Moreover, storm occurrences are very much more common in cases where barometers have fallen than in cases where they (have been present but) have not. Thus it appears that both air pressure and barometers cause storms. But do they truly do so? Or is one of these a case of spurious causation?

The screening-off solution says you should proceed as follows. First, consider how things look when you hold the barometer status fixed. In cases where the barometer does not fall, but air pressure does, storm occurrences are more frequent than in cases where neither the barometer nor the air pressure falls. Likewise in cases where barometers do fall. Now hold the air pressure status fixed. Among cases where air pressure does not fall, storms are not more common where barometers fall than where they do not. Likewise, among cases where air pressure does fall, storms are not more common in cases where barometers do fall than in cases where they do not.

Thus, air pressure screens off the barometer falling from the storm. Once you settle on the behavior of the air pressure, and look only at cases where the air pressure behaves in a certain way, the behavior of the barometer is irrelevant to how commonly you find storms. On the other hand, if you settle on a certain barometer behavior, the status of the air pressure remains relevant to how commonly you encounter storms.
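The screening-off procedure can be checked on a toy probability distribution. The Python sketch below is only an illustration: the numerical probabilities are invented, and the model assumes by construction that the barometer and the storm each depend on air pressure alone. The helper names `weight` and `p_storm` are hypothetical.

```python
from fractions import Fraction
from itertools import product

# Invented numbers, for illustration only.
P_PRESSURE = Fraction(3, 10)                                # P(pressure falls)
P_BAROMETER = {True: Fraction(9, 10), False: Fraction(1, 20)}  # P(barometer falls | pressure)
P_STORM = {True: Fraction(4, 5), False: Fraction(1, 10)}       # P(storm | pressure)


def weight(p, value):
    """Probability that a yes/no variable with P(yes) = p takes `value`."""
    return p if value else 1 - p


# Joint distribution over (pressure falls, barometer falls, storm).
joint = {
    (pressure, barometer, storm):
        weight(P_PRESSURE, pressure)
        * weight(P_BAROMETER[pressure], barometer)
        * weight(P_STORM[pressure], storm)
    for pressure, barometer, storm in product([True, False], repeat=3)
}


def p_storm(pressure=None, barometer=None):
    """P(storm | conditions); None means the variable is not held fixed."""
    matching = {
        k: w for k, w in joint.items()
        if (pressure is None or k[0] == pressure)
        and (barometer is None or k[1] == barometer)
    }
    return sum(w for k, w in matching.items() if k[2]) / sum(matching.values())


# Unconditionally, a falling barometer makes a storm more likely.
print(p_storm(barometer=True) > p_storm(barometer=False))
# Holding pressure fixed, the barometer makes no difference.
print(p_storm(pressure=True, barometer=True) == p_storm(pressure=True, barometer=False))
# Holding the barometer fixed, pressure still makes a difference.
print(p_storm(pressure=True, barometer=False) > p_storm(pressure=False, barometer=False))
```

All three comparisons come out true in this model. Because the conditional independence is built into the invented numbers, the sketch shows what screening off looks like when it holds, not that it holds for real barometers and storms.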

This asymmetry determines the direction of causation. Effects raise the probability of their causes, and indeed of other effects—that is why we can perform causal inference, and can infer the impending storm from the falling barometer. But causes “screen off” their effects from each other, while effects do not: the probability of the storm stops tracking the behavior of the barometer as soon as we fix the air pressure, which screens the storm from the barometer; whereas the probability of the storm continues to track the air pressure even when we fix the barometer (and likewise for the barometer when we fix the storm).

One major source of doubt about probabilistic theories is simply that probability and causation are different things (Gillies, 2000; Hesslow, 1976; Hitchcock, 2010). Causes may indeed raise probabilities of effects, but that is because causes make things happen, not because making things happen and raising their probabilities are the same thing. This general objection may be motivated by various counterexamples, of which perhaps the most important are chance-lowering causes.

Chance-lowering causes reduce the probability of their effects, but nonetheless cause them (Dowe & Noordhof, 2004; Hitchcock, 2004). Taking birth control pills reduces the probability of pregnancy. But it is not always a cause of non-pregnancy. Suppose that, as it happens, reproductive cycles are the cause. Or suppose that there is an illness causing the lack of pregnancy. Or suppose a man takes the pills. In such cases, provided the probability of pregnancy is not already zero, the pill may reduce the probability of pregnancy (albeit slightly), while the cause may be something else. In another well-worn example, a golfer slices a ball which veers off the course, strikes a tree, and bounces in for a hole in one. Slicing the ball lowered the probability of a hole in one but nonetheless caused it. Many attempts to deal with chance-lowering causes have been made, but none has secured general acceptance.

5. Ontological Stances

Ontological questions concern the nature of causation, meaning, in a phrase that is perhaps equally obscure, the kind of thing it is. Typically, ontological views of causation seek not only to explain the ontological status for its own sake, but to incorporate causation into a favored ontological framework.